AI Technology Stack: Complete Guide to Build AI Solutions

Why do some companies accelerate their AI success while others struggle to scale beyond early experiments?

The answer often lies in their AI technology stack, the invisible engine that powers every stage of AI development, from data collection to model deployment.

Think of it like the engine under a high-performance car. The better it’s built, the faster and smoother your AI initiatives run. Without it, even the most innovative ideas can stall before reaching production.

According to the 2024 McKinsey Global Survey, AI adoption jumped to 72% of organizations after hovering around 50% for the previous six years. That’s a clear signal: AI isn’t a trend anymore; it’s becoming a business standard. To stay ahead, you need a well-structured, future-ready AI tech stack.

What is an AI Tech Stack?

An AI tech stack is the complete set of tools, frameworks, and technologies used to build, train, and deploy artificial intelligence applications. It includes everything from data storage and processing tools to machine learning frameworks, cloud platforms, and deployment environments that enable AI models to operate in real-world scenarios.

In simple terms, your AI tech stack is the foundation that powers every AI system. For businesses, a well-structured AI technology stack means faster innovation, efficient automation, and better decision-making powered by data and intelligence.

Recent studies suggest that the global generative AI market could reach $1.3 trillion by 2032. This highlights a massive opportunity for growth and profit as more businesses embrace generative AI. By mastering the AI stack, you can position yourself at the forefront of this rapidly expanding industry.

Benefits of a Well-defined AI Tech Stack

In today’s AI-driven era, integrating AI into your application isn’t just an upgrade; it’s a necessity. But the success of that integration depends heavily on choosing the right AI tech stack.

A powerful, well-structured stack enables you to build scalable, secure, and high-performing AI solutions that align with your business goals. It ensures you’re not just experimenting with AI but delivering value through intelligent automation, personalized recommendations, and innovation.

Scalable Solutions

A strong tech stack enables your AI models to grow seamlessly as your business expands, maintaining performance even as data and user demands increase.

Data Security

A well-designed stack builds in security controls such as encryption, access management, and audit logging to protect sensitive information and support compliance with industry regulations.

Rapid Deployment

Efficient tools and platforms speed up AI development, helping you bring new features and applications to market faster than your competitors.

Easy Integration

A compatible tech stack fits smoothly with your existing software infrastructure, reducing complexity and implementation costs.

Future Ready

Leveraging cutting-edge, evolving technologies ensures your AI investments remain relevant and adaptable to future innovations.

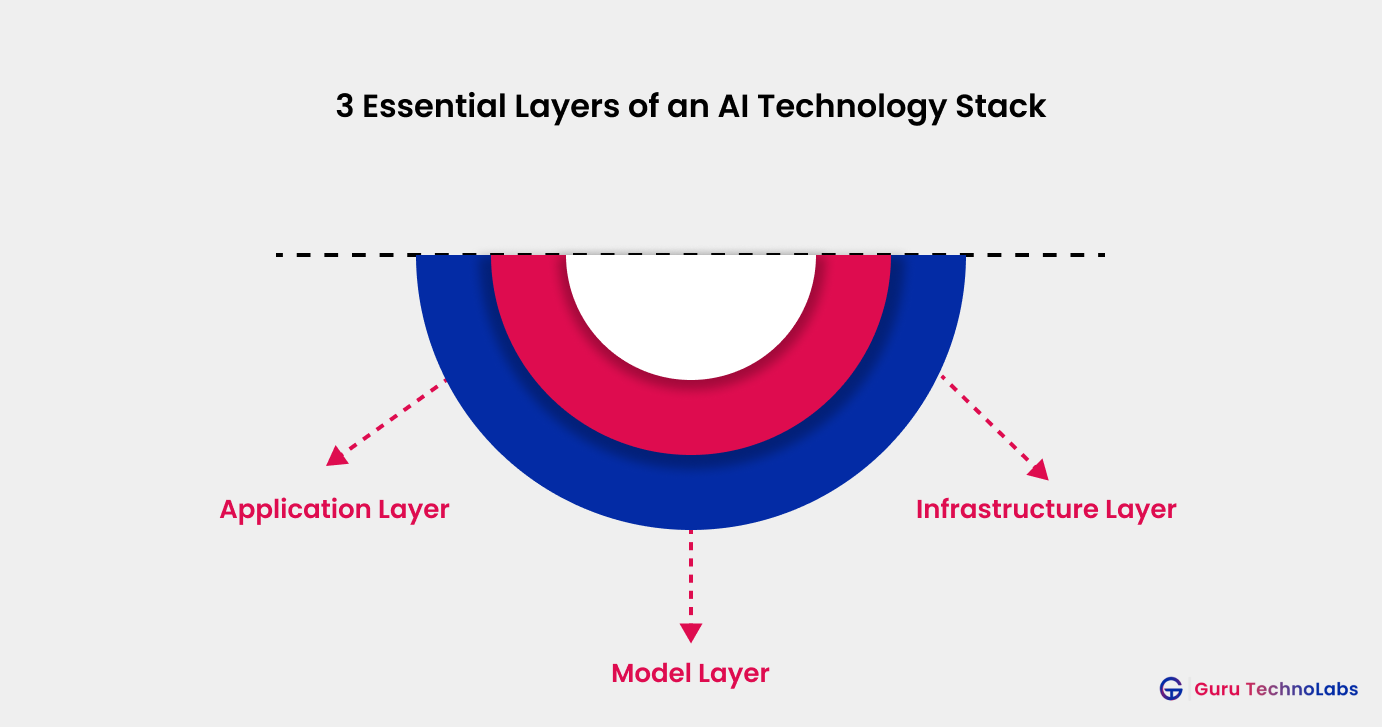

3 Essential Layers of an AI Technology Stack

An AI technology stack is built on three key layers, each playing a unique role in the design, training, and deployment of intelligent systems.

Together, these layers create the foundation that makes AI solutions smarter, faster, and ready for real-world use.

Application Layer – Where Users Meet AI

The application layer is the face of your AI system, where users interact with your product or service. It focuses on creating smooth, intuitive, and secure experiences across web, mobile, dashboards, and APIs.

This layer collects user inputs, visualizes AI-generated results, and turns complex model outputs into easy-to-understand insights.

In a typical setup, you might see a React-based frontend with a Django or Flask backend, balancing performance, scalability, and security.

Key Highlights

- Delivers a seamless and engaging user experience.

- Translates model outputs into actionable, data-driven insights.

- Ensures secure access and smooth communication between users and AI systems.

- Acts as the main entry point for AI-powered products.
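As a rough illustration of the application layer’s job (validate the input, call the model, and translate the raw score into something a user can act on), here is a framework-free Python sketch. The churn model, its `support_tickets` input, and the risk thresholds are hypothetical placeholders; in a React + Django or Flask setup, this logic would typically live inside a view or route handler.

```python
import json
from typing import Callable


def predict_churn_probability(features: dict) -> float:
    """Hypothetical stand-in for a trained model; returns a probability in [0, 1]."""
    # Toy rule for illustration only: more support tickets -> higher churn risk.
    return min(1.0, 0.1 + 0.15 * features["support_tickets"])


def handle_request(
    body: str, model: Callable[[dict], float] = predict_churn_probability
) -> tuple[int, str]:
    """Validate a JSON request body, run the model, and return (HTTP status, JSON body)."""
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        return 400, json.dumps({"error": "Request body must be valid JSON."})

    tickets = payload.get("support_tickets") if isinstance(payload, dict) else None
    # bool is a subclass of int in Python, so reject it explicitly.
    if not isinstance(tickets, int) or isinstance(tickets, bool) or tickets < 0:
        return 400, json.dumps({"error": "'support_tickets' must be a non-negative integer."})

    probability = model({"support_tickets": tickets})
    # Translate the raw score into a label a dashboard user can act on.
    if probability >= 0.7:
        risk = "high"
    elif probability >= 0.4:
        risk = "medium"
    else:
        risk = "low"
    return 200, json.dumps(
        {
            "churn_probability": round(probability, 2),
            "risk_level": risk,
            "message": f"This customer's churn risk is {risk}.",
        }
    )


status, response = handle_request('{"support_tickets": 5}')
print(status, response)
```

With five support tickets, the toy model returns a probability of 0.85, which the handler reports as high risk. Keeping validation and formatting in this layer means the model itself never has to deal with malformed requests.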

Model Layer – The Intelligence Engine

The model layer is where the real intelligence happens.

After receiving data from the application layer, this part handles data processing, model training, and algorithm execution to generate predictions and insights.

Libraries such as TensorFlow, PyTorch, and Scikit-learn power this layer, enabling applications ranging from natural language processing (NLP) to computer vision and predictive analytics.

Key Highlights

- Converts raw data into meaningful insights.

- Powers tasks like speech recognition, image analysis, and forecasting.

- Uses feature engineering, fine-tuning, and optimization to improve accuracy.

- Measures success through metrics like accuracy, precision, recall, and F1-score.
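All four metrics above derive from confusion-matrix counts: true and false positives and negatives. The dependency-free sketch below computes them for binary labels. In practice you would more likely call `accuracy_score`, `precision_score`, `recall_score`, and `f1_score` from `sklearn.metrics`; the example labels here are made up.

```python
def classification_metrics(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive class)."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # correctly flagged positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # correctly ignored negatives

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Made-up ground truth and predictions for eight examples.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 1, 1, 0]
print(classification_metrics(y_true, y_pred))
```

For these labels the sketch yields accuracy 0.625, precision 0.6, recall 0.75, and F1 ≈ 0.667: the model catches most positives but raises some false alarms, which a single accuracy number would hide.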

Infrastructure Layer – The Powerhouse Beneath It All

The infrastructure layer is the backbone of the entire AI tech stack.

It provides the computing resources, storage, and networking power that keep your AI systems running efficiently, whether hosted on the cloud (AWS, Azure, GCP) or on-premise.

This layer also manages data pipelines and resource scaling, ensuring smooth communication among components while balancing costs and performance.

Key Highlights

- Delivers scalable storage and computing power for large AI workloads.

- Supports both cloud and on-premise environments for flexibility.

- Keeps data pipelines efficient and consistent across layers.

- Optimizes infrastructure to balance cost, speed, and reliability.
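The resource scaling this layer performs often follows a simple proportional rule. The sketch below mirrors the formula documented for the Kubernetes Horizontal Pod Autoscaler, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), including a 10% tolerance band that suppresses small adjustments. Using GPU utilization as the metric and the specific replica bounds are illustrative assumptions.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Proportional scaling rule in the style of the Kubernetes Horizontal Pod Autoscaler."""
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target metric")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: avoid flapping
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to configured bounds to cap cost and guarantee minimum capacity.
    return max(min_replicas, min(max_replicas, desired))


# 4 inference servers averaging 90% GPU utilization against a 60% target.
print(desired_replicas(4, current_metric=90, target_metric=60))  # → 6
```

The min/max bounds are where the cost/performance balance described above becomes explicit: the upper bound caps spend, while the lower bound keeps enough capacity warm for sudden traffic.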

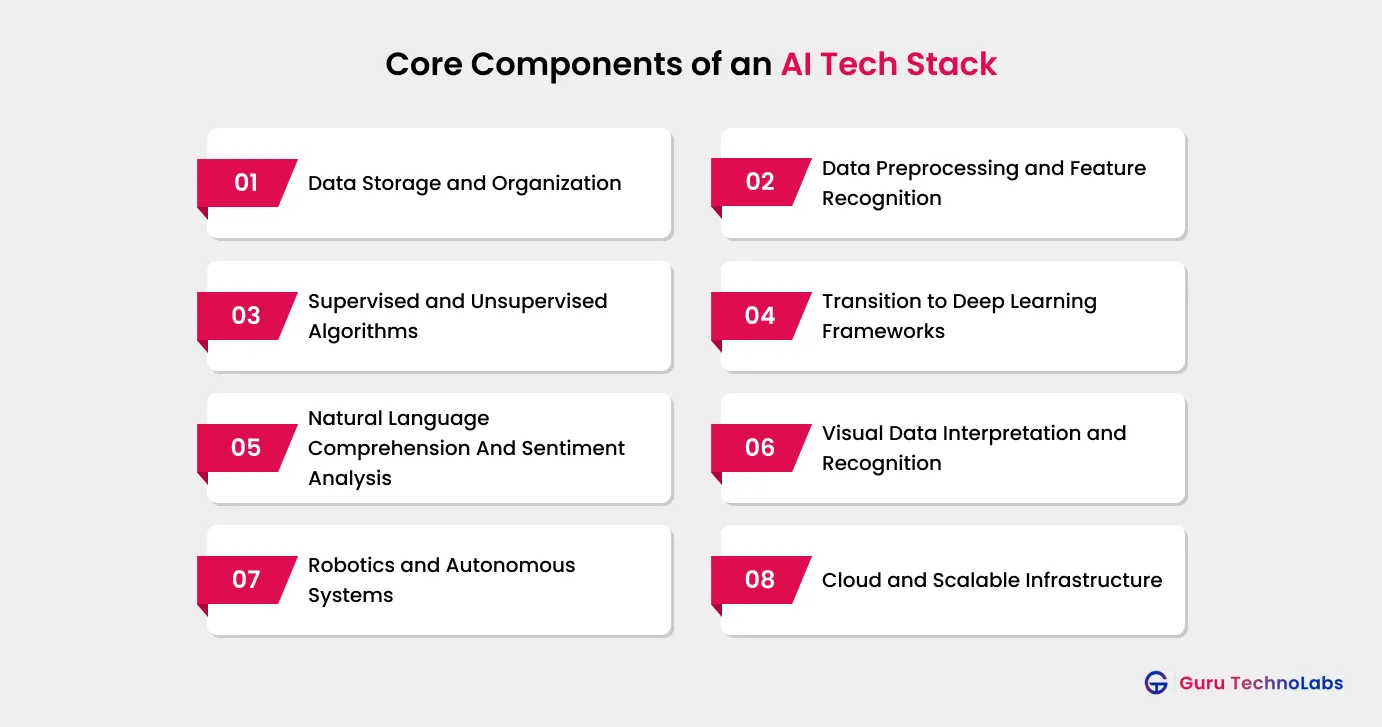

What Are the Core Components of an AI Tech Stack?

The core components of an AI tech stack are the essential building blocks that enable businesses to develop and deploy AI solutions effectively. Each element plays a specific role, from data processing and model training to infrastructure management and user interaction.

Understanding these components ensures you make the right choices for creating robust, scalable, and high-performing AI applications.

1. Data Storage and Organization

In an AI tech stack, data storage and organization form the foundation of all AI operations. This layer covers gathering, storing, and structuring the data that AI models rely on for training, learning, and generating insights.

- Data Sources: IoT sensors, APIs, cloud apps, and user interactions.

- Storage Solutions: Relational databases, NoSQL systems, or cloud object storage (e.g., Amazon S3, Google Cloud Storage).

- Data Lakes & Warehouses: Lakes store raw data in its native format; warehouses (e.g., BigQuery, Amazon Redshift) store structured, query-ready data.

- Pipelines: Automated data pipelines clean, move, and prepare data for AI models.

A well-organized data layer ensures your AI models have fast, accurate access to the information they need.
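A toy extract-transform-load (ETL) pipeline makes this flow concrete. In this sketch, a Python list stands in for raw data landing in a lake, and an in-memory SQLite table (Python’s built-in `sqlite3` module) stands in for a warehouse. The sensor records and the `readings` schema are hypothetical.

```python
import sqlite3

# Hypothetical raw events as they might arrive from IoT sensors.
RAW_EVENTS = [
    {"device_id": "sensor-1", "temperature_c": "21.5", "ts": "2024-05-01T10:00:00"},
    {"device_id": "sensor-2", "temperature_c": "", "ts": "2024-05-01T10:00:05"},
    {"device_id": " SENSOR-1 ", "temperature_c": "22.0", "ts": "2024-05-01T10:01:00"},
]


def transform(raw_events: list[dict]) -> list[tuple[str, float, str]]:
    """Clean raw events: drop records with missing readings, normalize IDs, parse numbers."""
    rows = []
    for event in raw_events:
        reading = event["temperature_c"].strip()
        if not reading:
            continue  # incomplete record: skip rather than guess a value
        rows.append((event["device_id"].strip().lower(), float(reading), event["ts"]))
    return rows


def load(rows: list[tuple[str, float, str]], conn: sqlite3.Connection) -> None:
    """Write cleaned rows into a structured, query-ready table (hypothetical schema)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS readings ("
        "device_id TEXT NOT NULL, temperature_c REAL NOT NULL, ts TEXT NOT NULL)"
    )
    conn.executemany("INSERT INTO readings VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(RAW_EVENTS), conn)
print(conn.execute(
    "SELECT device_id, AVG(temperature_c) FROM readings GROUP BY device_id"
).fetchall())  # → [('sensor-1', 21.75)]
```

Normalizing `" SENSOR-1 "` to `"sensor-1"` during the transform step is what lets the final query group both readings under one device; catching this kind of inconsistency before it reaches the model is the main job of a pipeline.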

2. Data Preprocessing and Feature Extraction

Once data is stored, the painstaking work of data preparation and feature extraction begins. Preprocessing includes normalization, missing-value handling, and outlier detection, and is commonly done in Python with libraries such as Pandas and Scikit-learn.

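Dimensionality-reduction and feature-selection techniques then streamline the inputs: Principal Component Analysis (PCA) compresses correlated features into a smaller set of components, while feature importance ranking keeps only the most informative inputs. Both can improve model accuracy and reduce computational load.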
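The preprocessing steps named above can be sketched with Python’s standard library alone: median imputation for missing values, the interquartile-range (IQR) rule for flagging outliers, and min-max normalization. This is an illustrative stand-in for what tools like Scikit-learn’s `SimpleImputer` and `MinMaxScaler` do; the `ages` column is made-up data, the 1.5 × IQR threshold is a common convention rather than a universal rule, and PCA is not shown.

```python
from statistics import median, quantiles
from typing import Optional


def impute_median(values: list[Optional[float]]) -> list[float]:
    """Replace missing values (None) with the median of the observed values."""
    fill = median(v for v in values if v is not None)
    return [fill if v is None else v for v in values]


def iqr_outlier_mask(values: list[float], k: float = 1.5) -> list[bool]:
    """Flag points outside Tukey's fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(values, n=4)
    spread = q3 - q1
    low, high = q1 - k * spread, q3 + k * spread
    return [v < low or v > high for v in values]


def min_max_scale(values: list[float]) -> list[float]:
    """Rescale values linearly into the [0, 1] range."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


ages = [25, 32, None, 41, 29, 120, 35]  # made-up column with a gap and a suspicious value
filled = impute_median(ages)            # None -> 33.5
mask = iqr_outlier_mask(filled)         # flags 120
kept = [v for v, is_outlier in zip(filled, mask) if not is_outlier]
print(min_max_scale(kept))
```

Median imputation is used here instead of the mean because the mean would be dragged upward by the 120 outlier before it is removed; order of operations matters in preprocessing.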

3. Supervised and Unsupervised Algorithms – Turning Data into Intelligence

After preprocessing, machine learning algorithms are used to identify patterns and make predictions.

- Supervised Learning: Learns from labeled examples; used for tasks like email spam detection, price prediction, or credit scoring.

- Unsupervised Learning: Used for clustering (e.g., customer segmentation), anomaly detection, and dimensionality reduction.

- Standard Models: SVMs and Random Forests (supervised) and K-Means (unsupervised).

Choosing the correct algorithm determines how accurate and efficient your AI system will be.
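To show what an unsupervised algorithm actually does, here is a compact K-Means sketch using Lloyd’s algorithm: alternately assign each point to its nearest centroid, then move each centroid to the mean of its assigned points. It uses a simple deterministic farthest-point initialization rather than the randomized k-means++ scheme that Scikit-learn’s `KMeans` uses by default, and the customer data is invented for illustration.

```python
Point = tuple[float, ...]


def _sq_dist(a: Point, b: Point) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def _centroid(cluster: list[Point]) -> Point:
    return tuple(sum(coords) / len(cluster) for coords in zip(*cluster))


def kmeans(points: list[Point], k: int, max_iter: int = 100) -> tuple[list[Point], list[int]]:
    """Cluster points into k groups; returns (centroids, cluster label per point)."""
    if not 1 <= k <= len(points):
        raise ValueError("k must be between 1 and the number of points")
    # Farthest-point initialization: start from the first point, then repeatedly
    # add the point farthest from every centroid chosen so far.
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(_sq_dist(p, c) for c in centroids)))

    labels = [0] * len(points)
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda i: _sq_dist(p, centroids[i])) for p in points]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for i in range(k):
            members = [p for p, label in zip(points, labels) if label == i]
            new_centroids.append(_centroid(members) if members else centroids[i])
        if new_centroids == centroids:  # converged: assignments can no longer change
            break
        centroids = new_centroids
    return centroids, labels


# Hypothetical customers: (annual spend in $k, store visits per month).
customers = [(1.0, 2.0), (1.5, 1.8), (1.2, 2.2), (8.0, 8.5), (8.5, 9.0), (7.8, 8.2)]
centroids, labels = kmeans(customers, k=2)
print(labels)  # → [0, 0, 0, 1, 1, 1]
```

The result is a simple customer segmentation: a low-spend, infrequent group and a high-spend, frequent group, found without any labels.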

4. Transition to Deep Learning Frameworks

For complex problems, deep learning frameworks such as TensorFlow, PyTorch, and Keras enable developers to build and train deep neural networks.

- CNNs (Convolutional Neural Networks): Ideal for image and video recognition.

- RNNs (Recurrent Neural Networks): Designed for sequential data such as text, speech, or time series, though transformers now dominate most language tasks.

- GPU Acceleration: Runs the matrix operations behind neural networks in parallel, making large-scale training practical.

These tools power high-end applications in computer vision, NLP, and autonomous systems, but require strong infrastructure and large datasets.
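The core operation inside a CNN is easier to grasp in plain code. This sketch runs one convolutional building block (a “valid” 2D convolution, a ReLU activation, and 2×2 max pooling) on a tiny grayscale image. The hand-picked vertical-edge kernel is an illustrative assumption: in a real network the kernel weights are learned, and frameworks such as PyTorch or TensorFlow run these operations as batched GPU kernels rather than Python loops.

```python
Matrix = list[list[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D convolution, stride 1, no padding.

    Like deep learning frameworks, this computes cross-correlation (the kernel is not flipped).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map: Matrix) -> Matrix:
    """Zero out negative responses."""
    return [[max(0.0, float(v)) for v in row] for row in feature_map]


def max_pool_2x2(feature_map: Matrix) -> Matrix:
    """Keep the strongest response in each 2x2 window (odd trailing rows/columns are dropped)."""
    return [
        [
            max(feature_map[r][c], feature_map[r][c + 1], feature_map[r + 1][c], feature_map[r + 1][c + 1])
            for c in range(0, len(feature_map[0]) - 1, 2)
        ]
        for r in range(0, len(feature_map) - 1, 2)
    ]


# Hypothetical 6x6 image: a bright vertical stripe (1s) on a dark background (0s).
image = [[0, 0, 1, 1, 0, 0] for _ in range(6)]
vertical_edge = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]  # responds to dark-to-bright transitions
features = max_pool_2x2(relu(conv2d(image, vertical_edge)))
print(features)  # → [[3.0, 0.0], [3.0, 0.0]]
```

The convolution responds positively at the stripe’s left (dark-to-bright) edge and negatively at its right edge; ReLU discards the negative response, and pooling shrinks the map while keeping where the edge was found.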

5. Natural Language Comprehension and Sentiment Analysis

Natural language tools allow machines to understand and respond to human language.

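Libraries like NLTK and spaCy handle foundational text processing such as tokenization and part-of-speech tagging, while transformer models like BERT and GPT-4 bring deeper context and intent recognition, which also strengthens tasks such as sentiment analysis.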

These models are core components of AI stacks wherever chatbots, voice assistants, or intelligent search features are deployed, because they can interpret nuanced phrasing and produce coherent, context-aware responses.

Key Highlights

- Modern models use transformer architecture for context-rich understanding

- Pre-trained models drastically reduce development time

- Fine-tuning enables customization for domain-specific use cases

- NLP pipelines often integrate with other AI modules like speech and vision for multi-modal applications
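The transformer architecture mentioned above is built on self-attention, where every token weighs every other token when building its representation. Below is a minimal sketch of scaled dot-product attention, softmax(QKᵀ/√d_k)·V, as defined in the original Transformer paper (“Attention Is All You Need”). Real models such as BERT add learned query/key/value projections, multiple heads, and masking, none of which are shown; the tiny token vectors are made up.

```python
import math

Matrix = list[list[float]]


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> tuple[Matrix, Matrix]:
    """Return (outputs, attention weights) for query, key, and value matrices."""
    d_k = len(k[0])
    outputs, weights = [], []
    for query in q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(query, key)) / math.sqrt(d_k) for key in k]
        w = softmax(scores)
        weights.append(w)
        # Each output is the attention-weighted average of the value vectors.
        outputs.append([sum(wj * row[col] for wj, row in zip(w, v)) for col in range(len(v[0]))])
    return outputs, weights


# Three hypothetical token embeddings, used directly as queries, keys, and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = scaled_dot_product_attention(tokens, tokens, tokens)
for row in weights:
    print([round(x, 2) for x in row])
```

Each row of weights sums to 1, so every output vector is a context-dependent blend of the whole sequence; this is the mechanism behind the “context-rich understanding” described above.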

6. Visual Data Interpretation and Recognition

In the field of visual data, computer vision plays a vital role in enabling machines to interpret and process images and videos. Libraries like OpenCV provide powerful tools for image manipulation, object detection, facial recognition, and real-time video analysis.

When paired with Convolutional Neural Networks (CNNs), these capabilities scale to handle complex tasks such as object classification, emotion detection, and scene understanding with high accuracy.

Together, they enable multi-modal AI, where visual data is integrated with other information types like text or audio to deliver richer and more contextual insights.

Key Highlights

- OpenCV supports real-time processing and cross-platform deployment

- CNNs enhance accuracy in tasks like facial and object recognition

- Common use cases: AR apps, autonomous vehicles, and healthcare imaging
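Detection results from OpenCV- or CNN-based pipelines are usually scored with intersection over union (IoU): the overlap area of a predicted and a ground-truth bounding box divided by the total area they cover. A minimal sketch, assuming boxes given as (x_min, y_min, x_max, y_max) pixel coordinates; the 0.5 match threshold is a widely used convention (for example, in the PASCAL VOC benchmark), not a fixed rule, and the example boxes are hypothetical.

```python
Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def box_area(box: Box) -> float:
    """Area of a box; degenerate or inverted boxes count as zero."""
    return max(0.0, box[2] - box[0]) * max(0.0, box[3] - box[1])


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned bounding boxes."""
    overlap = (max(a[0], b[0]), max(a[1], b[1]), min(a[2], b[2]), min(a[3], b[3]))
    intersection = box_area(overlap)  # zero when the boxes do not overlap
    union = box_area(a) + box_area(b) - intersection
    return intersection / union if union > 0 else 0.0


def is_match(predicted: Box, ground_truth: Box, threshold: float = 0.5) -> bool:
    """Count a detection as correct when its IoU with the ground truth meets the threshold."""
    return iou(predicted, ground_truth) >= threshold


# Hypothetical predicted and ground-truth boxes for one object.
predicted, actual = (10, 10, 50, 50), (20, 20, 60, 60)
print(round(iou(predicted, actual), 3), is_match(predicted, actual))  # → 0.391 False
```

Although the two boxes overlap substantially by eye, the IoU is only about 0.39, so under the 0.5 convention this detection would be counted as a miss.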

7. Robotics and Autonomous Systems

In physical systems such as robots, drones, and autonomous vehicles, sensor fusion is critical for building a coherent picture of the environment.

By combining data from multiple sensors, such as LiDAR, radar, GPS, and cameras, these systems can make more accurate decisions and operate safely in dynamic conditions.

Techniques like Simultaneous Localization and Mapping (SLAM) let machines map unfamiliar environments while tracking their own position within them, and planning algorithms such as Monte Carlo Tree Search (MCTS) support intelligent decision-making and navigation.

These components work hand in hand with machine learning and computer vision to enable AI systems to interact with the physical world reliably and adaptively.
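Sensor fusion can be illustrated with the one-dimensional Kalman filter update, which blends two noisy estimates in proportion to how much each can be trusted; for a static quantity with independent Gaussian noise, this reduces to inverse-variance weighting. The sketch below fuses hypothetical radar and LiDAR range readings. The values and variances are invented, and a real robot would also run a prediction step that propagates the state through a motion model between measurements.

```python
def kalman_update(mean: float, var: float, measurement: float, meas_var: float) -> tuple[float, float]:
    """Combine a prior estimate (mean, var) with a new measurement (measurement, meas_var)."""
    gain = var / (var + meas_var)  # trust the measurement more when the prior is uncertain
    new_mean = mean + gain * (measurement - mean)
    new_var = (1.0 - gain) * var   # fused variance is smaller than either input variance
    return new_mean, new_var


def fuse(readings: list[tuple[float, float]]) -> tuple[float, float]:
    """Sequentially fuse (value, variance) readings from independent sensors."""
    mean, var = readings[0]
    for value, variance in readings[1:]:
        mean, var = kalman_update(mean, var, value, variance)
    return mean, var


# Hypothetical readings: distance to the vehicle ahead in metres, variance in m^2.
radar = (10.0, 4.0)   # noisier sensor
lidar = (10.6, 1.0)   # more precise sensor
mean, var = fuse([radar, lidar])
print(round(mean, 2), round(var, 2))
```

The fused estimate (about 10.48 m with variance 0.8) lands closer to the more precise LiDAR reading and is more certain than either sensor alone, which is exactly why combining sensors improves decisions in dynamic conditions.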

8. Cloud and Scalable Infrastructure

AI tech stacks commonly rely on cloud platforms such as AWS, Google Cloud, and Microsoft Azure, which provide the scalable infrastructure needed to support modern AI workloads.

These platforms offer on-demand computing power, vast data storage capabilities, and integrated tools for processing and deploying machine learning models.

As the foundational layer, they ensure that all components of the AI stack, from data ingestion to real-time inference, operate smoothly and efficiently.

Top AI Frameworks for Developing Modern Applications

Modern AI applications are built on powerful frameworks that streamline the training, deployment, and scaling of machine learning models. These frameworks lower the entry barrier for developers and researchers alike, offering specialized tools for everything from experimental research to production-grade deployment.

PyTorch

Originally developed at Facebook (now Meta) by its AI research lab, PyTorch stands out with its dynamic computation graphs and intuitive interface, making it ideal for rapid prototyping and academic research.

TensorFlow

TensorFlow, developed by Google Brain, is renowned for its scalability, robust deployment tools, and support for distributed training across CPU, GPU, and TPU clusters.

Keras

Keras offers a user-friendly, modular high-level API that simplifies defining and training deep learning models. Long built on TensorFlow, it can also run on JAX and PyTorch backends since Keras 3.

Hugging Face Transformers

The Hugging Face Transformers library democratizes access to state-of-the-art pre-trained models for natural language tasks, from translation to summarization and question answering.

JAX

JAX focuses on high-performance numerical computing with automatic differentiation, enabling fast experimentation with functional programming paradigms.

Caffe

Caffe, developed at UC Berkeley, specializes in computer vision applications. Its concise, expressive architecture made it popular for model deployment and efficient inference, although the project is no longer actively developed.

PaddlePaddle

Developed by Baidu, this framework is a full-stack, industrial-scale platform supporting end-to-end model development, training, and deployment. Its pre-built models and tools are widely used in Chinese tech ecosystems for both research and commercial applications.

How to Choose the Right AI Technology Stack?

Selecting the right AI technology stack is crucial for building efficient, scalable, and sustainable AI solutions that truly align with your organization’s vision. A well-chosen stack accelerates development, ensures seamless integration, and supports future growth as the AI application evolves.

Below are the key considerations to guide your selection process:

Define Business Goals and Use Cases

Clearly articulate your business objectives and identify the specific AI problems you intend to solve. Align your tech stack choices with these goals to ensure every component drives tangible value.

Assess Team Skills and Resources

Evaluate your team’s technical expertise and available resources, from developers to infrastructure. Choose tools that match your team’s proficiency and can be maintained in the long term. Hire dedicated developers if needed to strengthen your team.

Consider Integration and Compatibility

Prioritize solutions that integrate smoothly with your existing systems and data sources to avoid disruptions and maximize efficiency.

Evaluate Scalability and Performance

Ensure the stack can scale with your business, handling growing data volumes and more complex workloads without performance degradation.

Review Security and Compliance

Verify that the stack meets industry security standards and regulatory requirements, especially for sensitive or regulated data.

Analyze Cost and Licensing

Compare open-source and proprietary options to balance affordability with the functionality and support you need.

Consult Experts If Needed

Engage AI development partners such as Guru TechnoLabs, or other industry specialists, for tailored advice, especially for complex or critical deployments.

Start Your AI Journey with Guru TechnoLabs

AI is no longer just a buzzword; it’s the driving force redefining industries and transforming global infrastructure. As AI-powered technologies rapidly advance, we’re witnessing a true game-changer in business innovation. No wonder AI-driven tools are skyrocketing across every sector.

While it’s tough to predict precisely where AI will peak, one thing is clear: the next five years will be pivotal. The evolution of AI applications will shape the future of global commerce for decades, bringing possibilities that are either incredibly promising or downright disruptive.

So why sit on the sidelines? Start small, think big, and let Guru TechnoLabs be your partner in leveraging AI to change your business and achieve significant success.

Frequently Asked Questions

The AI tech stack refers to a structured set of interoperable tools, technologies, and frameworks used to design, build, and deploy AI-powered applications efficiently.

Big-data tools such as Apache Spark and Hadoop are commonly included for large-scale data processing, transformation, and analysis, strengthening the stack’s overall analytical capabilities.

AI models can also be built without writing code: no-code tools like Google AutoML and DataRobot let users build and deploy models through intuitive interfaces, with no programming required.

Collaboration-focused platforms like Zed, Replit, and Sourcegraph enable cloud-based coding, real-time teamwork, and smarter code navigation, streamlining AI development workflows.